How To Use A Google Custom Search Engine For SEO Analysis [Case Study]

How To Use A Google Custom Search Engine For OnPage SEO Analysis

There's an interesting way to use a Google Programmable Search Engine (Custom Search) to figure out which of the Top 10 Google results have the best onpage SEO, as opposed to those that rank higher primarily because of offpage SEO or other ranking factors (like Domain Age, User Engagement, etc.).

This can be helpful when you're trying to analyze or compare your content, so you know whether you need to make it more relevant or focus on other SEO ranking factors instead.

Google Programmable Search seems to "wash out" the effect of backlinks in its results, so that you get a better picture of onpage SEO relevance.

Here's how you can test this:

Step 1: Do a search at Google.com for your keyword. In this example, we'll do a search for "how to catch an armadillo".

Step 2: Open a new tab and log in to Google Programmable Search.

Step 3: Create a new Custom Search Engine and add the Top 10 organic URL listings from Step 1. (Note: Do not add more than 10, or the results start acting weird.)

Step 4: Repeat the same search using the Google Custom Search.

Step 5: Compare the position differences.

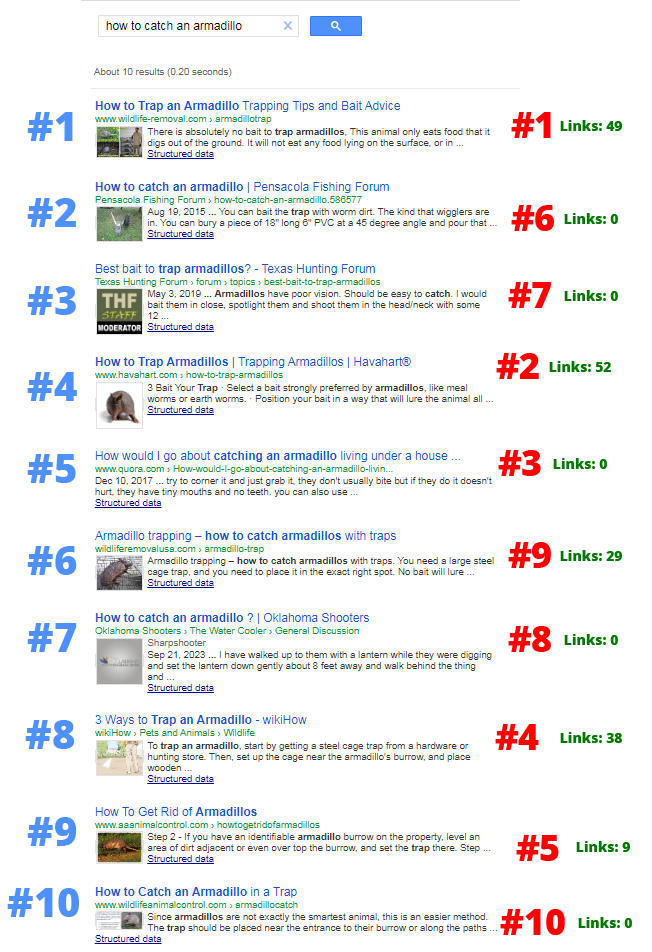

Now, in the comparison image, the left side shows the ranking positions for the Custom Search results.

On the right side, you can see the normal Google.com search results. I have also added the number of backlinks pointing to that URL (data via SEMRush).

How to interpret these results...

The first result in Custom Search is also the first result at Google.com, so no changes there.

But next we see that the #6 organic result from Google.com is the #2 result in the Custom Search, even though it has 0 links.

It seems that when backlinks and other ranking factors are left out of the final ranking score, this page is more relevant than every page except the first result.

From here, you can keep looking down and comparing all the differences...
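If you'd rather not compare the two lists by eye, the same comparison can be sketched in a few lines of Python. This is only an illustration: the function name `rank_shifts` and the `example.com` URLs are placeholders I made up, not the actual pages from the armadillo search. You would paste in your own two lists, in ranking order.

```python
def rank_shifts(google_urls, cse_urls):
    """Compare Google.com positions with Custom Search positions.

    Returns one (url, google_pos, cse_pos, shift) tuple per Custom Search
    result, in Custom Search order. A positive shift means the page ranks
    higher in Custom Search than it does at Google.com.
    """
    # Map each URL to its 1-based position at Google.com.
    google_pos = {url: pos for pos, url in enumerate(google_urls, start=1)}

    rows = []
    for cse_pos, url in enumerate(cse_urls, start=1):
        g_pos = google_pos.get(url)  # None if the URL was not in the Google list
        shift = g_pos - cse_pos if g_pos is not None else None
        rows.append((url, g_pos, cse_pos, shift))
    return rows


# Placeholder data: six URLs in Google.com order (hypothetical, not real results).
google_top = [f"https://example.com/page-{n}" for n in range(1, 7)]

# The same URLs in Custom Search order: page-6 has moved up to position 2.
cse_top = [
    "https://example.com/page-1",
    "https://example.com/page-6",
    "https://example.com/page-2",
    "https://example.com/page-3",
    "https://example.com/page-4",
    "https://example.com/page-5",
]

rows = rank_shifts(google_top, cse_top)
for url, g_pos, cse_pos, shift in rows:
    print(f"CSE #{cse_pos}  Google #{g_pos}  shift {shift:+d}  {url}")
```

Pages with a large positive shift are the ones that look strongest on onpage relevance alone, like the #6 result that moved to #2 in the example. Pages with a negative shift are probably leaning more on backlinks or other factors.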

How to use the analysis...

Well, you can use this method to study the most relevant pages based on content relevance, so that when you write your page, you can include the same important words, phrases, details, etc.

Also, once you've finished writing your content and it has been published and indexed, you can come back, add your new page to the Custom Search, and run the search again to see if it outranks the other pages. If it does, it means your page is more relevant, based on onpage factors. At that point, you can begin to focus on external ranking factors and user engagement signals, to pull the rank up even higher.
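If you want to repeat this check without retyping the search each time, one option is Google's Custom Search JSON API. The sketch below is not part of the original method and rests on some assumptions: that you have an API key and your search engine ID (the `cx` value), and that the API returns results in the same order as the Custom Search box, which I have not verified. The helper names are my own.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Endpoint of the Custom Search JSON API.
API_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_query_url(api_key, engine_id, query):
    """Build the request URL for one search against your Custom Search Engine."""
    params = {"key": api_key, "cx": engine_id, "q": query, "num": 10}
    return API_ENDPOINT + "?" + urlencode(params)


def result_links(response):
    """Pull the result URLs, in ranked order, out of a parsed API response."""
    return [item["link"] for item in response.get("items", [])]


def position_of(page_url, links):
    """Return the 1-based position of page_url in links, or None if absent."""
    try:
        return links.index(page_url) + 1
    except ValueError:
        return None


def fetch_links(api_key, engine_id, query):
    """Run the search over the network and return the ranked result URLs."""
    with urlopen(build_query_url(api_key, engine_id, query)) as resp:
        return result_links(json.load(resp))


# Offline illustration with a made-up response (placeholder URLs).
sample_response = {
    "items": [
        {"link": "https://example.com/competitor-a"},
        {"link": "https://example.com/my-new-page"},
        {"link": "https://example.com/competitor-b"},
    ]
}
sample_links = result_links(sample_response)
my_position = position_of("https://example.com/my-new-page", sample_links)
print(my_position)
```

In real use you would call `fetch_links(...)` with your own key, engine ID, and keyword, then pass the result to `position_of(...)` along with your page's URL. If your page comes back at position 1, that suggests it's the most relevant of the set on onpage factors.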

Since it takes a little time, you probably only want to use this on keywords that you're really determined to rank #1 for. But in those cases, it can be a helpful way to determine what your next steps should be, so you can get to the top.

All the best,

Jack Duncan